Musk: Basic L5 Autonomous Driving Functions Will Be Done This Year, and Tesla Is Building a China R&D Team

- Time: 2020-07-11 20:52

- Source: 车东西

- Author: 车东西

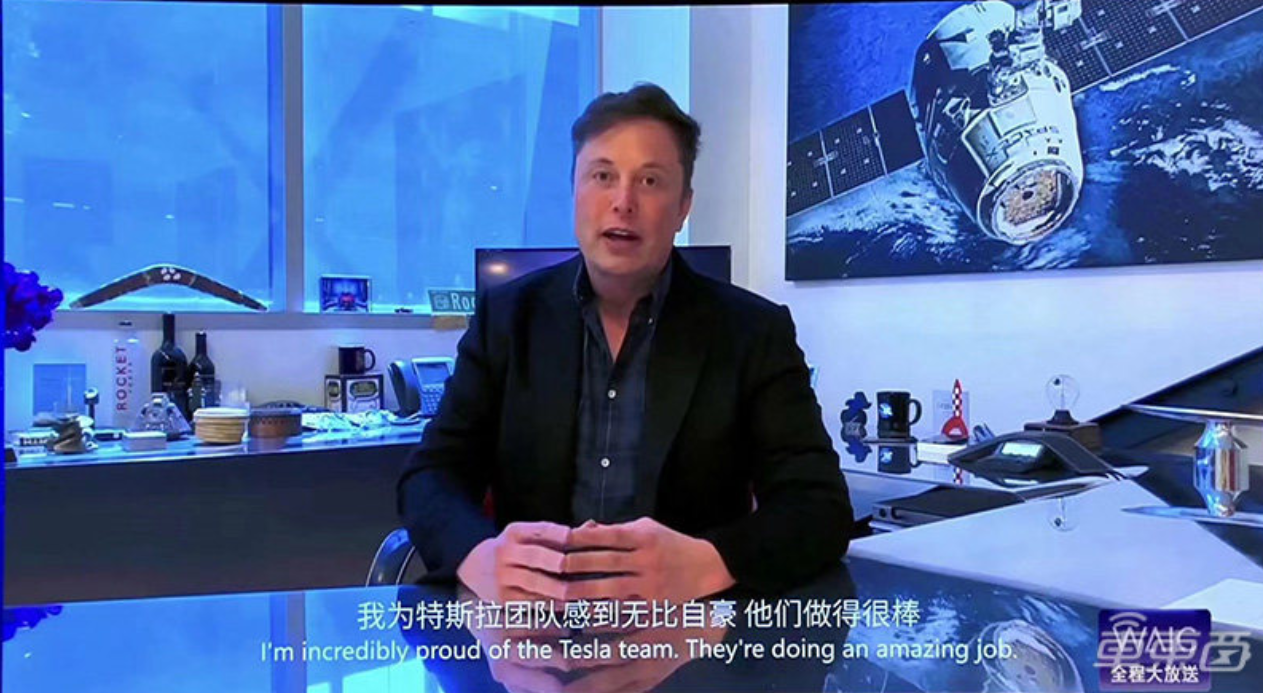

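July 9 news: The 2020 World Artificial Intelligence Conference opened in Shanghai this morning. Because of the COVID-19 pandemic, most of this year’s invited guests are taking part by video call. Musk, one of the invited guests, joined the conference this morning by video link.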

Musk joins the World Artificial Intelligence Conference online

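In the interview, Musk revealed that Tesla is building an engineering team in China for autonomous driving and electric vehicles. The team will develop autonomous driving for Chinese roads so that the system keeps improving. Musk also announced that Tesla will complete development of the basic functionality for L5 autonomous driving this year, and that Tesla’s L5 system will be safer.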

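On artificial intelligence, Tesla’s Autopilot self-driving chip achieves high recognition accuracy while keeping chip power consumption low. Because Tesla’s Hardware 3.0 self-driving chip is so powerful, only part of its computing capacity is in use today. Making full use of Hardware 3.0 will probably take about another year.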

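In addition, since the Tesla Shanghai factory was completed, it has been using more AI software to optimize vehicle production, and it will create more jobs in the future.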

1. Musk joins the AI conference online: Tesla is already very close to L5 autonomous driving

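On the morning of July 9, the 2020 World Artificial Intelligence Conference opened in Shanghai. Musk attended online as an invited guest and gave an interview.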

The World Artificial Intelligence Conference venue

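In the interview, Musk described Tesla’s latest progress in two areas: autonomous driving and AI technology. He said Tesla will essentially complete development of the basic functionality for L5 autonomous driving this year.

On autonomous driving, Musk said Tesla Autopilot currently works reasonably well in the Chinese market.

However, because Tesla’s autonomous driving engineering is concentrated in California, Autopilot works better in the United States, and best of all in California.

To adapt to the different traffic conditions of each country and region, Tesla is therefore building an autonomous driving engineering team in China. A great deal of original engineering will be done in China, and Tesla has already started recruiting strong R&D engineers.

On advanced autonomous driving, Musk said he is very confident about L5 and that Tesla will finish developing the basic functionality of an L5 system this year.

He said the hardest part of L5 autonomous driving is that it demands a higher level of safety: merely matching the safety of human drivers is far from enough.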

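On AI chips, Musk said the Autopilot chip has pushed AI chip development forward. Tesla designed its own chip because the chips on the market with enough compute draw too much power, while the low-power chips lack the compute.

He said: “If we had used conventional GPUs, CPUs or similar products, we would have needed several hundred watts, and the trunk would have been filled with computers, GPUs and a big cooling system. That would have been costly, bulky and power-hungry. Bear in mind that energy consumption is critical to an electric car’s range.”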

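Since the Tesla Shanghai factory was completed, Tesla has also applied AI in many ways at Gigafactory Shanghai to improve production efficiency.

Musk said he expects the Shanghai factory to use more AI and smarter software in the future.

As AI keeps advancing, machines will need more engineers to develop them, which can also create more jobs.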

2. Appendix: Musk’s interview at the conference, from the Chinese transcript (not verified by Musk)

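Host: Hello, Elon! Although you cannot be in Shanghai today, we are delighted to meet you again at the World Artificial Intelligence Conference over video.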

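Musk: Thank you for the invitation. It is great to take part in the conference again. I very much look forward to attending in person in the future.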

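Q: Then let us get straight to it; there are a few questions we would like to discuss with you. First, we all know that Autopilot is a very popular feature of Tesla’s electric cars. How is it working in the Chinese market?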

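A: Tesla Autopilot works reasonably well in the Chinese market. But because our autonomous driving engineering is concentrated in the United States, Autopilot works better in the US, and best in California, mainly because that is where our engineers are. Once we are sure a feature works well in California, we roll it out to the rest of the world. We are now building an engineering team in China. If you would like to be an engineer at Tesla China, you would be very welcome. That would be great.

I want to stress that what we will be doing in China is a lot of original engineering. It is not simply carrying things over from America to China; it is original design and original engineering done in China. So if you are considering a job, please consider working at Tesla China.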

Q: How confident are you that we will eventually reach L5 autonomous driving? When do you think that day will come?

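A: I am very confident that L5, or fully autonomous, driving will be achieved, and I think it will happen very soon.

At Tesla, I feel we are already very close to L5 autonomous driving. I am confident that we will complete the basic functionality for L5 this year. For L5, the thing to consider is what level of safety is acceptable on real roads relative to human driving. Is twice as safe as a human driver enough? I do not think regulators will accept L5 autonomous driving that is only as safe as a human driver.

The question is whether L5 needs to be twice, three times, five times or ten times as safe. So you can think of L5 safety as a sequence of 9s. Do you need 99.99% safety or 99.99999%? How many 9s do you want? What level is acceptable? And then, how much data is needed to convince regulators that it is safe enough? I think these are the questions that will come up when you ask the real, in-depth questions about L5 autonomous driving.

I think there are no fundamental, underlying challenges left for L5 autonomous driving, but there are many detailed problems. Our challenge is to solve all of these small problems, then integrate the system, and keep working through the long tail of problems. You will find that you can handle the vast majority of situations, but every so often something strange and unusual turns up, so you need a system that can find and resolve those strange, unusual situations. That is why you need real-world situations. Nothing is more complex than the real world. Any simulation we create is a subset of the complexity of the real world.

So we are currently very focused on the details of L5 autonomous driving. I believe these problems can be solved entirely on the hardware that Tesla vehicles carry today; by improving only the software, we can achieve L5 autonomous driving.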
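The "how many 9s" and "how much data" questions in the answer above can be put into numbers with a short calculation. The sketch below is an illustration only, not Tesla's or any regulator's method. It assumes each trip is an independent trial that either succeeds or fails, and that no failures at all are observed during testing; the function names `failure_probability` and `clean_trials_needed` are hypothetical.

```python
import math


def failure_probability(nines: int) -> float:
    """Per-trial failure probability for a reliability written with `nines` nines.

    nines=4 means 99.99% reliability (failure probability 1e-4);
    nines=7 means 99.99999% reliability (failure probability 1e-7).
    """
    if nines < 1:
        raise ValueError("nines must be a positive integer")
    return 10.0 ** (-nines)


def clean_trials_needed(nines: int, confidence: float = 0.95) -> int:
    """Failure-free independent trials needed to support the target reliability.

    Assumption: if the true failure probability were p, the chance of seeing
    n clean trials in a row would be (1 - p) ** n. We ask for that chance to
    fall below 1 - confidence, which gives n >= ln(1 - confidence) / ln(1 - p).
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    p = failure_probability(nines)
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-p))


if __name__ == "__main__":
    for nines in range(4, 8):
        print(nines, failure_probability(nines), clean_trials_needed(nines))
```

Under these assumptions, each additional 9 multiplies the amount of failure-free evidence required by roughly ten, which is one way to see why the last few 9s dominate the effort.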

Q: How do you see current progress in each of the three pillars of AI and robotics: perception, cognition and action?

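A: I am not sure AI can be divided up that way. But if we use those categories, then in perception, taking object recognition as an example, the technology has made enormous progress. It is fair to say that today’s advanced image recognition systems are better than humans, even in expert fields.

The real question is how much compute, how many computers and how much compute time are needed to train perception. How efficient is the image recognition training system? As for image or sound recognition, can an AI system accurately recognize things in a given byte stream? The answer is: extremely well.

Cognition is probably the weakest area. Can AI understand concepts? Can it reason effectively? Can it create things that make sense? There are many very creative, technically advanced AIs today, but they cannot curate their creative output well. At least for now, the results do not look quite right to us, but they will look better in the future.

Then there is action. Games are a useful analogy. In any game with clear rules, or any game with limited degrees of freedom, AI is superhuman. At this point it is hard to think of a game at which an AI player could not reach a superhuman level, and that is without even counting AI’s faster reaction time.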

Q: In what ways has Autopilot driven the development of AI algorithms and chips? And how has it changed our understanding of AI technology?

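A: When we were developing an AI chip for Autopilot, we found that there was no system on the market with reasonable cost and low power consumption. If we had used conventional GPUs, CPUs or similar products, we would have needed several hundred watts, and the trunk would have been filled with computers, GPUs and a big cooling system. That would have been costly, bulky and power-hungry. Bear in mind that energy consumption is critical to an electric car’s range.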

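So we developed Tesla’s own AI chip, the Tesla Full Self-Driving computer with dual systems, which has 8-bit accelerators for dot-product operations. Many of you here probably know this already: AI involves a great many dot products. If you know what a dot product is, you know the volume of dot products is huge, which means our computer has to do a lot of them. We have not actually exploited the full capability of the Tesla Full Self-Driving computer yet. In fact, we only cautiously turned on the chip’s second system a few months ago. Making full use of the Tesla Full Self-Driving computer will probably take at least another year or so.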
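To make the remark about 8-bit dot products concrete, here is a minimal sketch of how a floating-point dot product can be approximated with 8-bit integers. The interview only says that the chip has 8-bit accelerators for dot products; the symmetric linear quantization used below is a common textbook scheme chosen for illustration, not a description of Tesla's hardware, and the names `quantize`, `int8_dot` and `approx_dot` are hypothetical.

```python
def quantize(values, scale):
    """Map floats to signed 8-bit integers: round(v / scale), clamped to [-128, 127]."""
    if scale <= 0:
        raise ValueError("scale must be positive")
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(a_q, b_q):
    """Dot product of two int8 vectors, accumulated in an ordinary wide integer."""
    if len(a_q) != len(b_q):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a_q, b_q))


def approx_dot(a, b):
    """Approximate the float dot product of a and b using 8-bit integers.

    Assumption: one scale per vector, chosen so that the largest magnitude
    maps to 127. An all-zero vector falls back to a scale of 1.0.
    """
    scale_a = max(abs(v) for v in a) / 127 or 1.0
    scale_b = max(abs(v) for v in b) / 127 or 1.0
    # Multiply and add in integers, then rescale once at the end.
    return int8_dot(quantize(a, scale_a), quantize(b, scale_b)) * scale_a * scale_b


if __name__ == "__main__":
    a = [0.5, -1.0, 0.25]
    b = [2.0, 0.5, -4.0]
    exact = sum(x * y for x, y in zip(a, b))
    print("exact:", exact, "int8 approximation:", approx_dot(a, b))
```

The design point is that the inner loop uses only small integer multiplications and additions, which is the kind of work a dedicated low-power accelerator can do cheaply, at the cost of a small rounding error.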

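We have also developed the Tesla Dojo training system, designed to process large amounts of video data quickly in order to improve training of the AI system. Dojo is like an FP16 training system, limited mainly by chip heat and by communication speed. So we are also developing new buses and new cooling systems to build a more efficient computer that can process video data more effectively.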

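How do we see AI algorithms evolving? I am not sure this is the best way to understand it, but a neural network mostly takes in a large amount of information from reality, much of it passive optical, and creates a vector space, essentially compressing a huge number of photons into a vector space. On my drive this morning I was wondering whether people can access the vector space in their own minds. We usually take reality for granted, in an analog way. But I think you can actually access the vector space in your mind and understand how your brain processes all the external information. What it is really doing is remembering as little information as possible.

It takes in a large amount of information, filters it and keeps only the relevant part. So how do people create a vector space in the brain? It holds only a tiny fraction of the original data, yet decisions can be made from that vector-space representation. This is really like compression and decompression on a massive scale, a bit like physics, because physics formulas are essentially compression algorithms for reality.

That is what physics does. Clearly, physics formulas are compression algorithms for reality. Put simply, we humans are the evidence of physics at work. If you ran a true physics simulation of the universe, it would take a great deal of compute. Given enough time, sentience eventually emerges. Humans are the best proof. If you believe in physics and the history of the universe, you know the universe began as quarks and electrons, was hydrogen for a long time, then helium and lithium appeared, and then supernovas. The heavy elements formed billions of years later, and some of those heavy elements learned to talk. That is us: essentially evolved hydrogen. Leave hydrogen alone for a while and it slowly turns into us. I think people may not quite agree with this. So some will ask: where does specialness come in? Where does sentience come in? Is the whole universe sentient in a special way, or is nothing special? Or, at what point on the way from hydrogen to humans did sentience arise?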

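Q: One last question. Congratulations on Tesla’s outstanding results this year. We would also like to know how things are going at Gigafactory Shanghai. Are there any manufacturing-related AI applications there?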

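A: Thank you. The Tesla Shanghai factory is progressing well. I am incredibly proud of the Tesla team; they are doing a great job! I look forward to visiting Gigafactory Shanghai as soon as possible. Their excellent work is a real comfort to me. I do not know how to put it; I am truly grateful to the Tesla China team.

We expect to use more AI and smarter software in our factories over time. But I think it will take a while to use AI really effectively in a factory. You can think of a factory as a complex collective, a cybernetic collective, involving both people and machines. All companies are actually like this, but manufacturing companies especially, or at least the robot-control part of manufacturing companies, are more complex. So, interestingly, as AI keeps developing, it will probably create more jobs, and whether people will need to work at all becomes uncertain.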

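Host: Thank you again for joining the World Artificial Intelligence Conference and for your wonderful remarks. We look forward to seeing you in person at next year’s conference!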

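Musk: Thank you for the online interview. I hope to be able to attend in person next year; I really enjoy coming to China. China always surprises me. China has many people who are both smart and hardworking, China is full of positive energy, and people in China are excited about the future. I will make the future a reality, so I very much look forward to coming back.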

3. Appendix: English transcript of Musk’s interview at the conference

Host: Hello, Elon. Even though you cannot be in Shanghai right now, it’s nice to have you at the 2020 World Artificial Intelligence Conference over video.

Musk: Thanks for having me. Yes, but it is great to be here again. I look forward to attending in person in the future.

Q:Great. Let’s get started with a couple of questions. First, in terms of Tesla products, we know that Autopilot is one of its most popular features. How does it work in China?

A:Tesla Autopilot does work reasonably well in China. It does not work quite as well in China as it does in the US because still most of our engineering is in the US so that tends to be the local group of optimization. So Autopilot tends to work the best in California because that is where the engineers are. And then once it works in California, we then extend it to the rest of the world. But we are building up our engineering team in China. And so if you’re interested in working at Tesla China as an engineer, we would love to have you work there. That will be great.

I really want to emphasize that we are going to be doing a lot of original engineering in China. It’s not just converting sort of stuff from America to work in China, we will be doing original design and engineering in China. So please do consider Tesla China, if you’re thinking about working somewhere.

Q:Great. How confident are you that level five autonomy will eventually be with us? And when do you think we will reach full level five autonomy?

A: I’m extremely confident that level five or essentially complete autonomy will happen, and I think it will happen very quickly.

I think at Tesla, I feel like we are very close to level five autonomy. I think I remain confident that we will have the basic functionality for level five autonomy complete this year. The thing to appreciate for level five autonomy is what level of safety is acceptable for the public streets relative to human safety? And then, so is it enough to be twice as safe as humans? Like I do not think that the regulators will accept equivalent safety to humans.

So the question is, will it be twice as safe as a requirement, three times as safe, five times as safe, 10 times as safe? So you can think of really level five autonomy as kind of like a march of 9s. Like do you have 99.99% safety? 99.99999%? How many 9s do you want? What is the acceptable level? And then what amount of data is required to convince regulators that it is sufficiently safe? Those are the actual in-depth questions, I think, to be asking about level five autonomy. That it will happen is a certainty.

So yes, I think there are no fundamental challenges remaining for level five autonomy. There are many small problems. And then there’s the challenge of solving all those small problems and then putting the whole system together, and just keep addressing the long tail of problems. So you’ll find that you’re able to handle the vast majority of situations. But then there will be something very odd. And then you have to have the system figure out and train to deal with these very odd situations. This is why you need a kind of a real world situation. Nothing is more complex and weird than the real world. Any simulation we create is necessarily a subset of the complexity of the real world.

So we are really deeply enmeshed in dealing with the tiny details of level five autonomy. But I’m absolutely confident that this can be accomplished with the hardware that is in Tesla today, and simply by making software improvements, we can achieve level five autonomy.

Q:Great. If we look at the three building blocks of AI and robotics: perception, cognition, and action, how would you assess the progress respectively so far?

A: I am not sure I totally agree with dividing it into those categories: perception, cognition, and action. But if you do use those categories, I’d say that probably perception we’ve made, if you can say like the recognition of objects, we’ve made incredible progress in recognition of objects. In fact, I think it would probably be fair to say that advanced image recognition systems today are better than almost any human, even in an expert field.

So it is really a question of how much compute power, how many computers were required to train it? How many compute hours? What was the efficiency of the image training system? But in terms of image recognition or sound recognition, and really any signal you can say, generally speaking any byte stream, can an AI system recognize things accurately with a given byte stream? Extremely well.

Cognition. This is probably the weakest area. Do you understand concepts? Are you able to reason effectively? And can you be creative in a way that makes sense? You have so many advanced AIs that are very creative, but they do not curate their creative actions very well. We look at it and it is not quite right. It will become right though.

And then action, sort of like things like games, as maybe something part of the action part of thing. Obviously at this point, any game with rules, AI will be superhuman at any game with an understandable set of rules, essentially any game below a certain degree of freedom level. Let us say at this point, any game, it would be hard-pressed to think of a game where if there was enough attention paid to it, that we would not make it superhuman AI that could play it. That’s not even taking into account the faster reaction time of AI.

Q: In what ways does Autopilot stimulate the development of AI algorithms and chips? And how does it refresh our understanding of AI technology?

A: In developing AI chips for Autopilot, what we found was that there was no system on the market that was capable of doing inference within a reasonable cost or power budget. So if we had gone with conventional GPUs, CPUs and that kind of thing, we would have needed several hundred watts and we would have needed to fill up the trunk with computers and GPUs and a big cooling system. It would have been costly and bulky and have taken up too much power, which is important for range for an electric car.

So we developed our own AI chip, the Tesla Full Self-Driving computer, with dual systems on chip and eight-bit accelerators for doing the dot products. I think probably a lot of people in this audience are aware of it. But AI consists of doing a great many dot products. This is like, if you know what a dot product is, it’s just a lot of dot products, which effectively means that our brain must be doing a lot of dot products. We still actually haven’t fully explored the power of the Tesla Full Self-Driving computer. In fact, we only turned on the second system on chip harshly a few months ago. So making full use of the Tesla Full Self-Driving computer will probably take us at least another year or so.

Then we also have the Tesla Dojo system, which is a training system. And that’s intended to be able to process vast amounts of video data to improve the training for the AI system. The Dojo system, that’s like an fp16 training system and it is primarily constrained by heat and by communication between the chips. We are developing new buses and new sort of heat rejection or cooling systems that enable a very high operation computer that will be able to process video data effectively.

How do we see the evolution of AI algorithms? I’m not sure of the best way to understand it, except that what a neural net seems to mostly do is to take a massive amount of information from reality, primarily passive optical, and create a vector space, essentially compress a massive amount of photons into a vector space. I was just thinking actually on the drive this morning, have you tried accessing the vector space in your mind? Like we normally take reality just for granted in kind of an analog way. But you can actually access the vector space in your mind and understand what your mind is doing to take in all the world data. What we are actually doing is trying to remember the least amount of information possible.

So it’s taking a massive amount of information, filtering it down, and saying what is relevant. And then how do you create a vector space world that is a very tiny percentage of that original data? Based on that vector space representation, you make decisions. It is really like compression and decompression that is just going on at a massive scale, which is kind of how physics is like. You can think of physics algorithms as essentially compression algorithms for reality.

That is what physics does. Those physics formulas are compression algorithms for reality, which may sound very obvious. But if you simplify what it means, we are the proof points of this. If you simply ran a true physics simulation of the universe, it also takes a lot of compute. If you are given enough time, eventually you will have sentience. The proof of that is us. And if you believe in physics and the arc of the universe, it started out as sort of quarks and electrons. And there was hydrogen for quite a while, and then helium and lithium. And then there were supernovas, the heavy elements formed billions of years later, some of those heavy elements learned to talk. We are essentially evolved hydrogen. If you just leave hydrogen out for a while, it turns into us. I think people don’t quite appreciate this. So if you say, where does the specialness come in? Where does sentience come in? The whole universe is sentience special or nothing is? Or you could say at what point from hydrogen to us did it become sentient?

Q:Great. Our last question, congratulations on an incredible year so far at Tesla. How are things going at Gigafactory Shanghai? Is there any application of AI to manufacturing specifically at Giga Shanghai?

A: Thank you. Things are going really well at Giga Shanghai. I’m incredibly proud of the Tesla team. They’re doing an amazing job. And I look forward to visiting Giga Shanghai as soon as possible. It’s really impressive work that’s been done. I really can’t say enough good things. Thank you to the Tesla China team.

We expect over time to use more AI and essentially smarter software in our factory. But I think it will take a while to really employ AI effectively in a factory situation. You can think of a factory as a complex, cybernetic collective involving humans and machines. This is actually how all companies are really, but especially manufacturing companies, or at least the robot component of manufacturing companies is much higher. So the interesting thing about this is that I think over time there will be both more jobs and having jobs will be optional.

One of the false premises sometimes people have about economics is that there’s a finite number of jobs. There is definitely not a finite number of jobs. An obvious, reductive example would be if the population increased tenfold in a century: if there were a finite number of jobs, would 90% of people be unemployed? Or think of the transition from an agrarian to an industrial society where in an agrarian society, 90% of people or more would be working on the farm. Now we have 2% or 3% of people working on the farm. So at least in the short to medium term, my biggest concern about growth is being able to find enough humans. That is the biggest constraint on growth.

Host: Thanks again, Elon, for your time and for joining us at this year’s World Artificial Intelligence Conference. We hope to see you next year in person.

Musk: Thank you for having me in virtual form. I look forward to visiting physically next year, and I always enjoy visiting China. I am always amazed by how many smart, hardworking people there are in China, and just how much positive energy there is, and that people are really excited about the future. I want to make things happen. I cannot wait to be back.

(Responsible editor: 约翰)